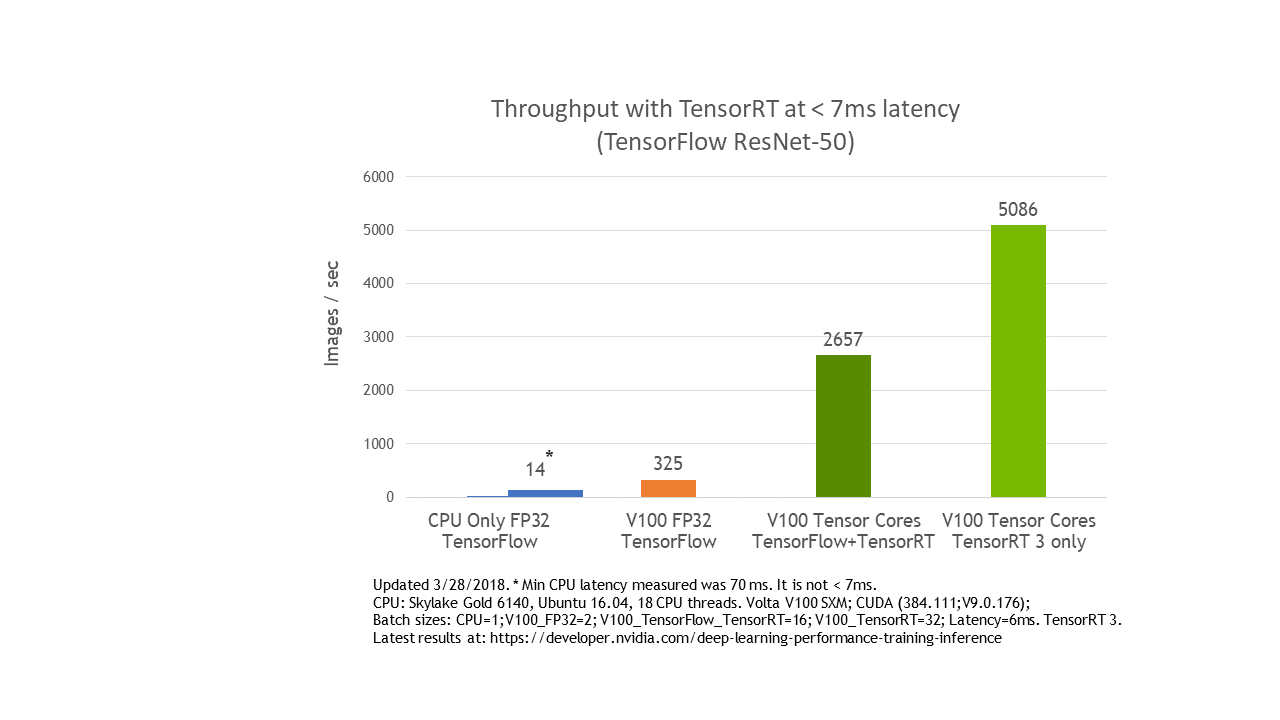

GitHub - brokenerk/TRT-SSD-MobileNetV2: Python sample for referencing pre-trained SSD MobileNet V2 (TF 1.x) model with TensorRT

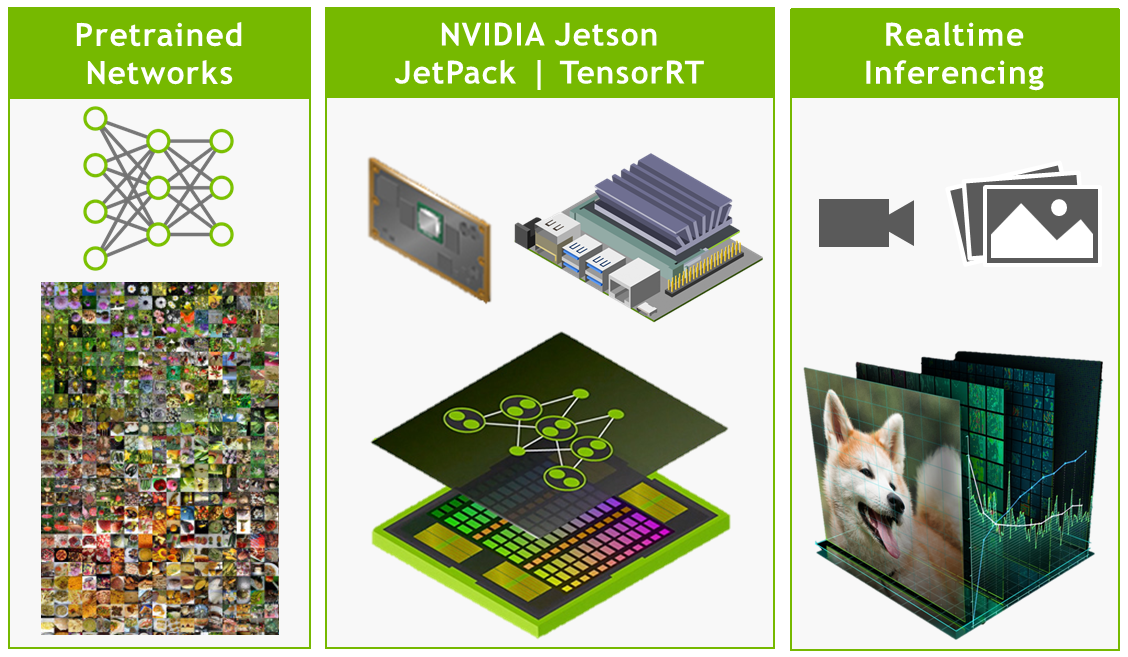

Run Tensorflow 2 Object Detection models with TensorRT on Jetson Xavier using TF C API | by Alexander Pivovarov | Medium