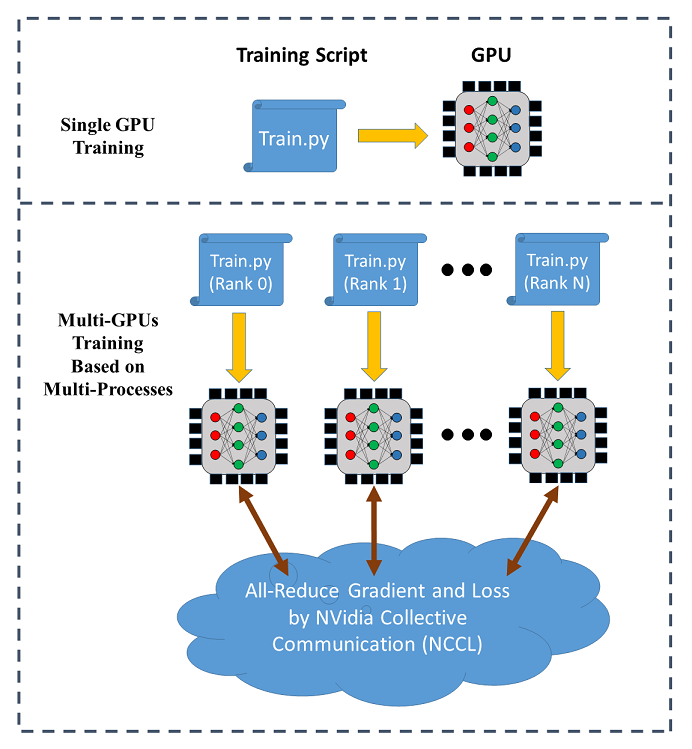

Learn PyTorch Multi-GPU properly. I'm Matthew, a carrot market machine… | by The Black Knight | Medium

Multi-GPU and distributed training using Horovod in Amazon SageMaker Pipe mode | AWS Machine Learning Blog

Efficient and Robust Parallel DNN Training through Model Parallelism on Multi-GPU Platform: Paper and Code - CatalyzeX

a. The strategy for multi-GPU implementation of DLMBIR on the Google...

![Iteration Time Prediction for CNN in Multi-GPU Platform: Modeling and Analysis | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/55fd0eefc23f262c2875ec4c1c3472a689d88c50/3-Figure1-1.png)

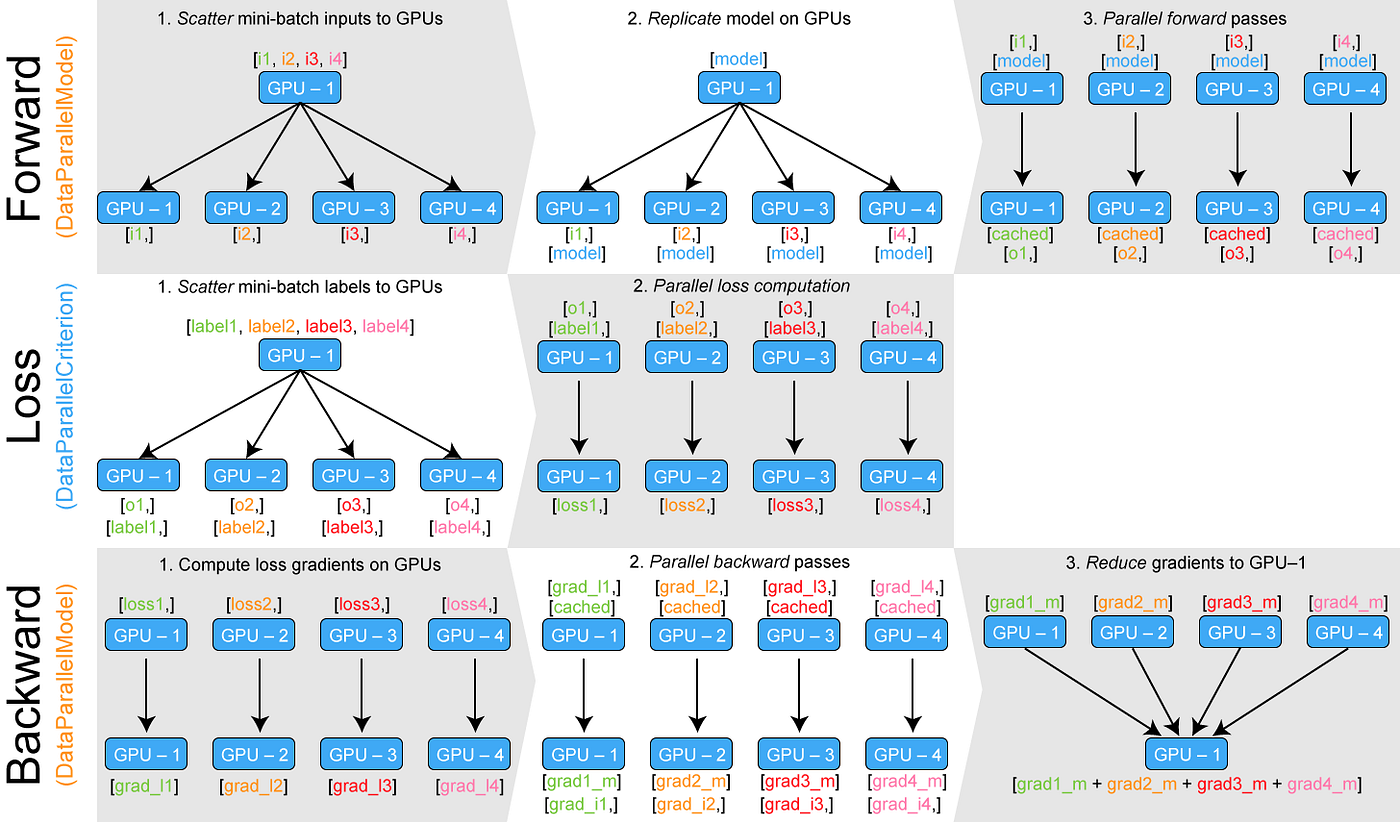

[PDF] Iteration Time Prediction for CNN in Multi-GPU Platform: Modeling and Analysis | Semantic Scholar

DeepSpeed: Accelerating large-scale model inference and training via system optimizations and compression - Microsoft Research