deep learning - PyTorch: How to know if GPU memory being utilised is actually needed or is there a memory leak - Stack Overflow

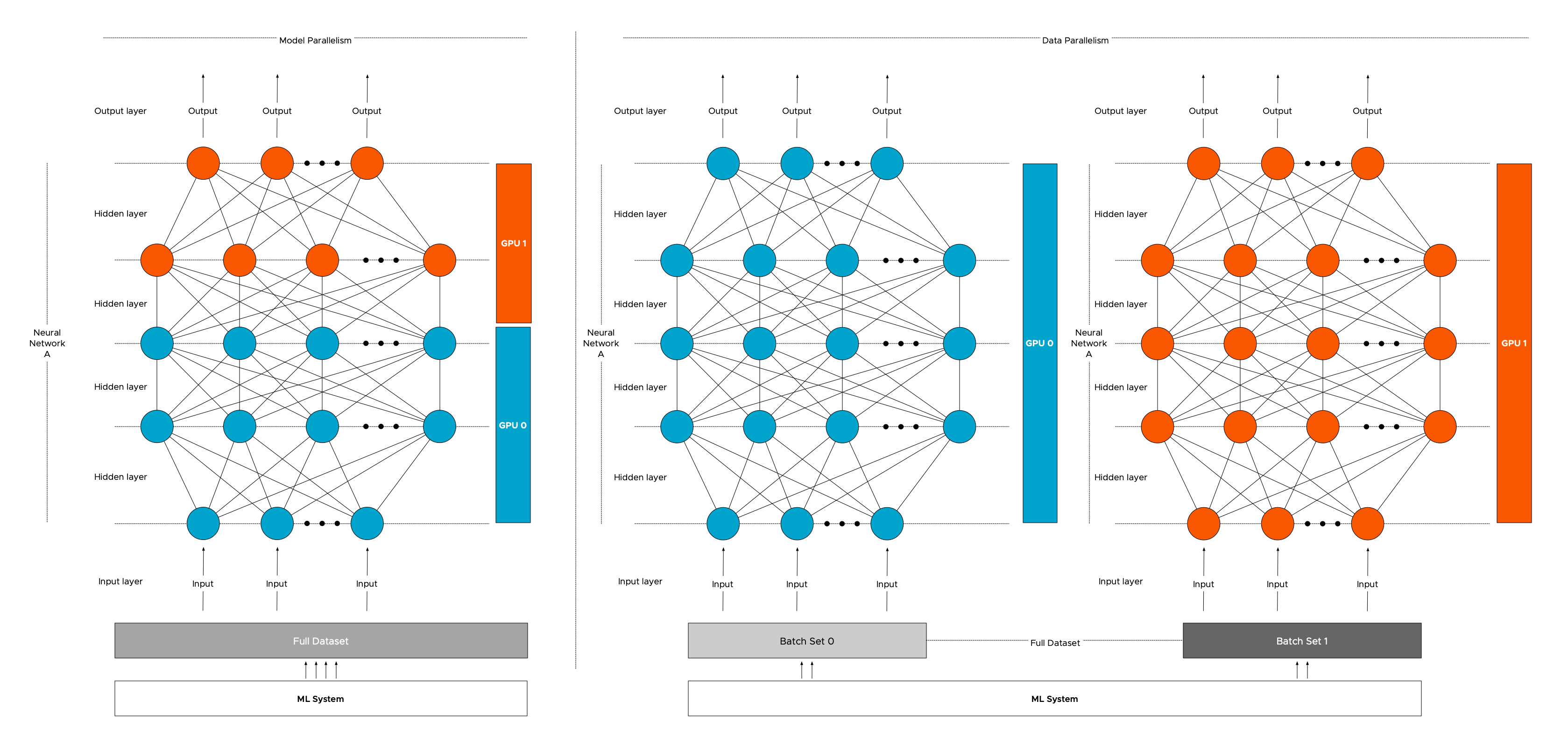

ZeRO-Infinity and DeepSpeed: Unlocking unprecedented model scale for deep learning training - Microsoft Research

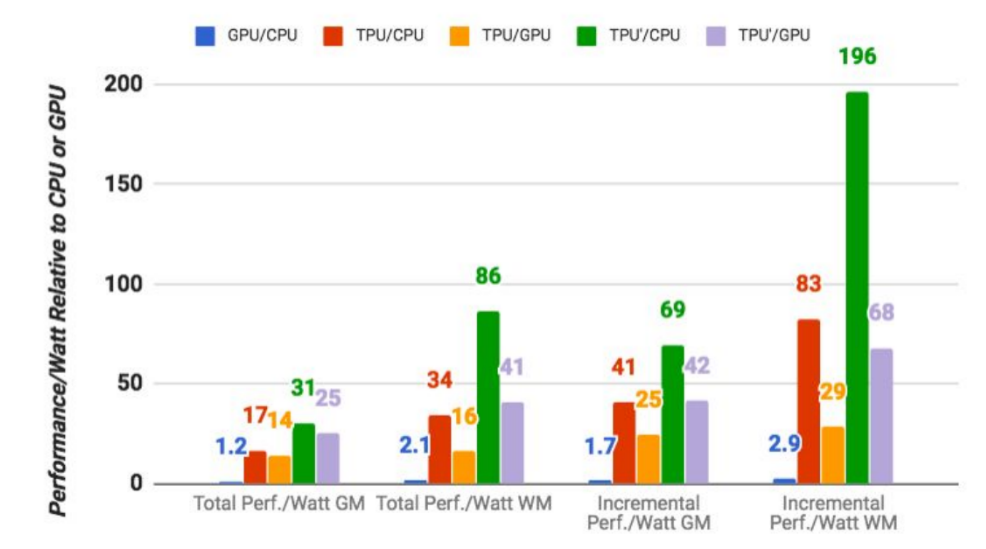

Google says its custom machine learning chips are often 15-30x faster than GPUs and CPUs | TechCrunch

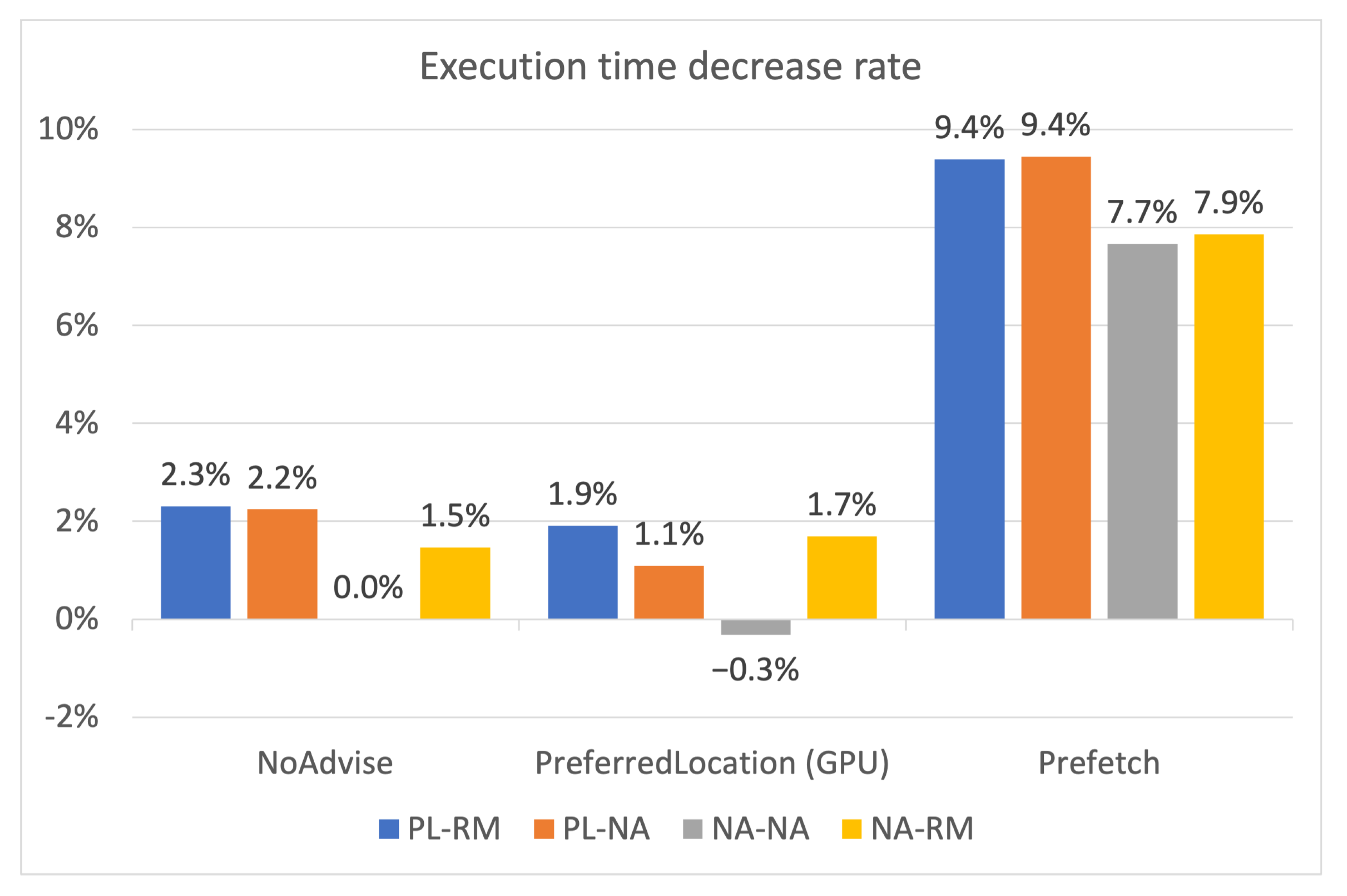

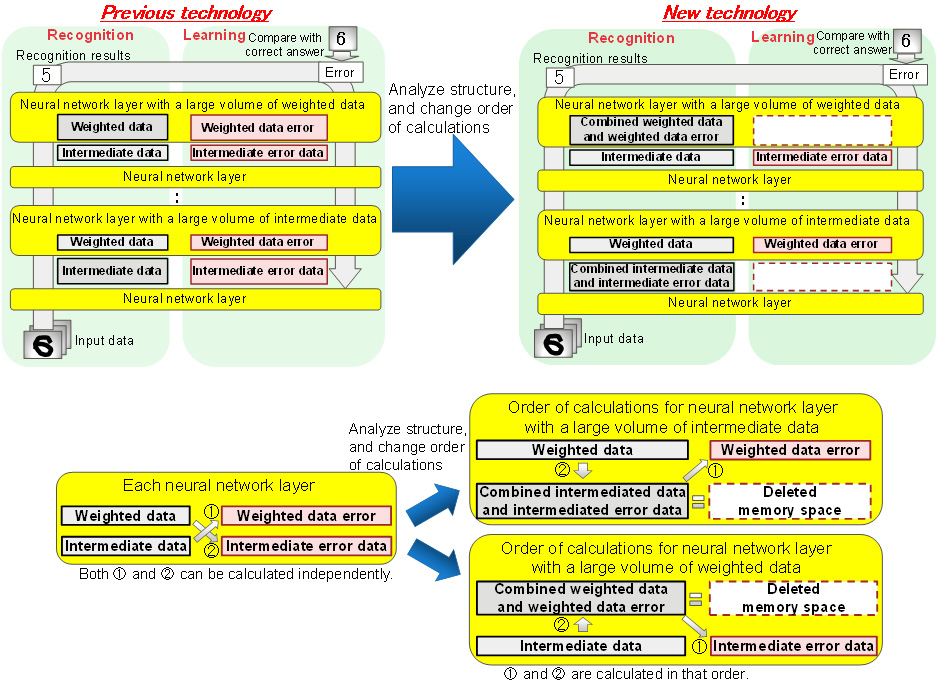

Fujitsu Doubles Deep Learning Neural Network Scale with Technology to Improve GPU Memory Efficiency - Fujitsu Global

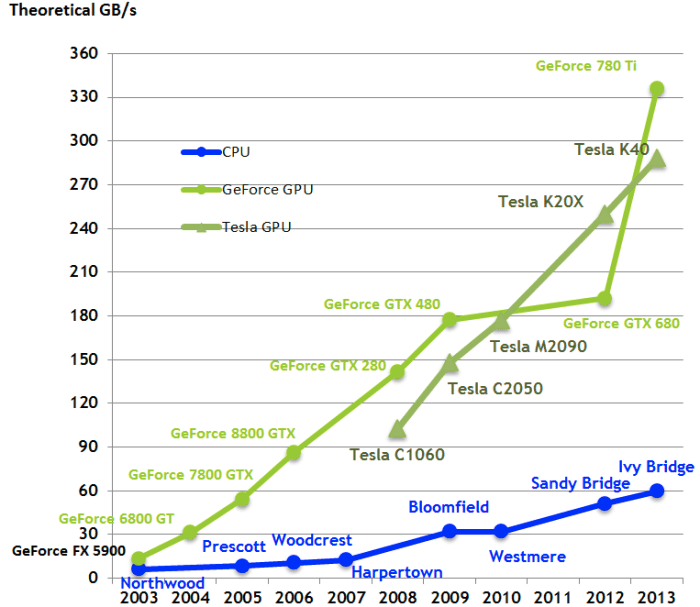

GPU Memory Size and Deep Learning Performance (batch size) 12GB vs 32GB -- 1080Ti vs Titan V vs GV100

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science